ELI5 #1: How will content owners get paid for data used to train AI?

If AI is the latest arms race, data is its ammo. There are a number of ways that AI companies could pay publishers. Here's some speculation about what these business models might look like.

hey :) I’m Anant and I’m another 20-something conjecturing about technology. My goal is to take complicated ideas, break them down, and develop a perspective. I’m writing to hold myself accountable to learning — hopefully you’ll find it interesting. Maybe you won’t. Thanks for reading either way.

hey everyone 🙌,

Thanks for joining! In this post, I’m going to explore data licensing for AI, a topic that’s gotten a lot of coverage in the last few months. I’ll do some work to get the casual techie up to speed, but if you know this topic well, skip down to the part where I conjecture about what types of business models might work here (Part A).

OpenAI made waves a few weeks ago when it released its latest model, GPT-4o, which seems more like a… person than any other model on the market. The twitter-verse (X-verse doesn’t have the same ring to it) immediately started drawing parallels to Her, a 2013 flick in which a man falls in love with an AI (played by Scarlett Johansson). Over the last couple of weeks, the ensuing drama has given the Kardashians a run for their money (am I the only one pushing for an AI reality TV show??). A quick list of events that have transpired in the last few weeks: A disgruntled former board member accused Sam Altman of lying to the board. The entire OpenAI safety team quit. Like literally all of them. And ScarJo threatened to sue OpenAI, alleging that the new model had a voice that sounded an awful lot like her own. Folks, get your popcorn.

Things are heating up here in AI-land, and any of the above controversies could be a post in itself, but I want to take this opportunity to talk about the third of these mishaps, which touches on the ongoing debate about “fair use” — aka how AI models ingest copyrighted content and appropriately credit its owners.

OK. Let’s start from the beginning: Generative AI is fundamentally changing the way that content is created and consumed, siphoning consumers away from the internet as we know it and toward AI-generated content. Now that Google serves up AI-generated summaries on search, I find myself clicking into web pages less and less (don’t you??). Anecdotally, my friend just told me that search traffic to her travel blog decreased by 35% because people didn’t click through. Ummmmm??

These models improve by training on more and better data. We’ve gotten to the point where they have sucked up just about every word on the internet, and they’re going to run out of training data in a few years (see graph below). A recent New York Times article describes tech companies surreptitiously changing user agreements, boot-strapping data transcription from video to text, and leveraging AI models to create synthetic data, all in the pursuit of more words to train on.

If AI is the latest arms race, data is its ammo (GPUs —the hardware that processes AI workflows— too, probably).

What’s the problem with using training data?

But who owns that data? Well, all kinds of people — lots is in the hands of tech companies (e.g., YouTube and Facebook), who in exchange for hosting data, have user agreements enabling them to use the data (mostly) as they please. Then, of course, there are traditional publishers (like the Wall Street Journal), whose content — stories, movies, books — is subject to more stringent copyright laws.

You’ve probably followed that content creators (from solo artists to large media publications) are pretty unhappy with AI companies freely wielding their copyrighted content, threatening to eat (at least some of) their lunch. For example, we saw uproar in late 2022 when Lensa’s AI-generated art began making its way around the internet — everybody and their mom had an “avatar” (turns out, AI can make you hot 🥵). Artists were pissed that the styles they had created were being mimicked by an AI model that was producing images for cheap. They felt like their work had been stolen. And since then, the opposition has continued to mount: Content owners are beginning to use their data leverage to stop AI from ingesting content. The New York Times, for example, filed a lawsuit alleging that millions of its articles were used to train LLMs and demanding compensation for their use.

There have been early technology attempts to prevent AI bots from scraping copyrighted work — for example, Verify and Spawning AI are building protocols to ensure only licensed AI agents are able to access data. Steg.AI and Imatag help creators tag their content with watermarks. And Nightshade, a UChicago project, “poisons” image data to disrupt AI training. Hmmm… who knew you could poison robots too? We’ll see how these products take hold.

As these challenges go to court, the question will be: Is the use of copyrighted material to teach AI models a “fair use” under copyright law? If yes, no compensation would be owed to the owner of the original work. At present, the regulatory landscape is… dynamic, to put it nicely (aka no one knows what the heck regulators are gonna do). See, for example, the bill recently proposed by CA Congressman Schiff, which would require AI companies to report whether they used any copyrighted training data. These legislative, regulatory, and judicial decisions are expected to take several years to resolve, leaving the industry without clear guidance about whether model builders should pay publishers for ingestion of their work.

What might data monetization look like?

But AI is happening now, and many say it would be “impossible” to create useful AI without copyrighted material. So what might data licensing look like?

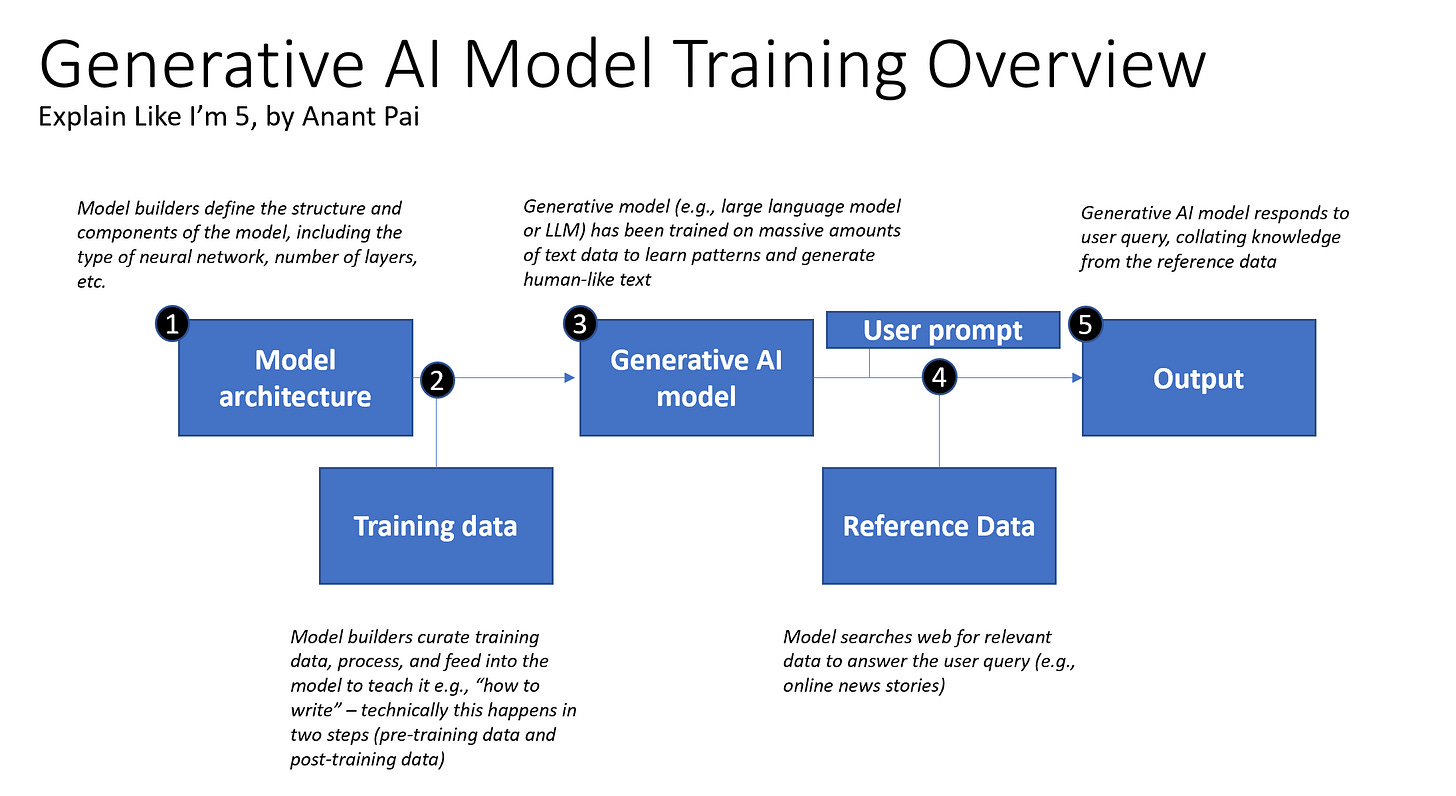

Before we get there, let’s take a quick detour to talk about the multi-stage process by which generative AI models are trained (see image below). To start, there are at least two fundamentally different types of data; let’s call them (1) training data and (2) reference data (also sometimes called “external data”). Training data is the core data that is fed through the model so it can learn patterns and relationships in order to make predictions. In generative text AI models, these are the datasets that the model uses to learn how to form sentences. In doing so, it also picks up facts about the world that it will inherently know. Think of this as the model reading a textbook about “how to write”, which contains real-world writing samples. This process technically happens in two steps (pre-training and post-training), but let’s keep this simple. By contrast, reference data is not contained within the model; it is scraped from the internet and other real-time sources and fed into the model as part of the prompt. This external data provides supplementary information that the model can use beyond what it learned from its training data. Think of this as the articles a writer consults to compose a new work.
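To make the "fed into the model as part of the prompt" bit concrete, here's a minimal sketch of how an app might attach reference data to a question. Everything here is made up for illustration: `ReferenceDoc`, `build_prompt`, and the example weather snippet are hypothetical names and data, not any real product's API, and real systems are a lot more involved.

```python
from dataclasses import dataclass


@dataclass
class ReferenceDoc:
    source: str  # hypothetical publisher name
    text: str    # snippet fetched at query time, not learned in training


def build_prompt(question: str, docs: list[ReferenceDoc]) -> str:
    """Attach reference data to the user's question as plain text."""
    lines = ["Answer the question using the sources below.", ""]
    for i, doc in enumerate(docs, start=1):
        # Number each source so the model can cite it in its answer.
        lines.append(f"[{i}] {doc.source}: {doc.text}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


docs = [ReferenceDoc("Example Weather Service", "Sunny, high of 75F in Chicago today.")]
prompt = build_prompt("What's the weather in Chicago today?", docs)
print(prompt)
```

The model's weights never change here; the reference data just rides along in the prompt, which is why it's easier to tell which sources were used.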

So far, questions of fair use have focused primarily on training data, but as people build apps on top of large language models (or LLMs) and AI agents begin crawling the internet retrieving data in real time, I expect there to be questions about reference data as well.

As we think about time horizons, there are three different points in time at which AI data might be monetized.

A. What if we monetized before training?

When large language models like ChatGPT are trained, they’re trained on data as of a certain date — for example, until less than a month ago, if you asked free ChatGPT about today’s weather, it would say something like “I’m sorry. I don’t have access to real-time data… I was last trained on data available up until January 2022.” That version of ChatGPT had training data, but no ability to access reference data on the live web.

As a way of sidestepping the legal gray area, we’re starting to see licensing deals where AI companies pay content owners for data to train their models. For example, Google and Reddit reached a $60M deal whereby the social media platform’s content would be used to train Google’s models; OpenAI has been incredibly active in plowing forward with these agreements as well, most recently with News Corp (the parent company of the Wall Street Journal), The Atlantic, Reddit, and others. These deals have, for the most part, been bilateral: one AI model builder pays one content owner for the non-exclusive rights to use the data. We don’t yet have a lot of context on exactly what the rules of that use are, but you can imagine that it took lots of lawyerly back and forth to define them. This might make sense for large publishers who have lots of data, but what about the mom and pop content creator? They get left out of this.

In this scenario, data becomes a fixed cost: the price of the data is defined up front and fed into the model wholesale to improve its quality.

B. What if we monetized while scraping?

Rather than blanket licensing agreements covering large corpuses of data, one alternative is to pay for the data in an “on demand” format. You might imagine, for example, a two-sided marketplace with, on one side, publishers that have content rights, and, on the other side, AI companies who want to purchase training data. Based on their needs, AI companies might buy access to the datasets that they need, with data quality, quantity, and “uniqueness” being potential determinants of cost.

This works almost as well as bilateral data licensing agreements for training data, but also sets the stage for iterations of “real time” AI, in which agents are scraping the live web for reference data. Take, for example, the AI-native search engine Perplexity, or the most recent releases of ChatGPT (powered by Bing Search), which use foundation models in the background to compile answers to user queries from data on the live internet. This allows models to, for example, answer your question about today’s weather. Let’s say a user wants information that is included in a New York Times article. A tool like Perplexity might weigh how highly it can monetize its search output against how relevant the information seems to be, and decide it is willing to pay a small licensing fee to the data owner to access that article. You might even imagine a world in which users pass their credentials into a wallet, whereby the AI model could access the New York Times if the end user is a subscriber.
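Here's one way to picture that pay-or-skip decision, as a rough sketch. I'm assuming the tool can estimate two things up front: the chance the article actually helps (`relevance`) and what a good answer is worth to it (`revenue_if_useful`). Both inputs, the function name, and the dollar amounts are hypothetical; nobody has published how these tools would actually price this.

```python
def should_pay_for_article(fee: float, relevance: float, revenue_if_useful: float) -> bool:
    """Pay when the expected revenue from the answer covers the licensing fee.

    relevance: estimated probability (0 to 1) that the article helps answer the query.
    revenue_if_useful: what the tool earns if the article turns out to be useful.
    """
    if not 0.0 <= relevance <= 1.0:
        raise ValueError("relevance must be between 0 and 1")
    expected_value = relevance * revenue_if_useful
    return expected_value >= fee


# A likely-relevant article is worth a 2-cent fee; a long shot is not.
print(should_pay_for_article(fee=0.02, relevance=0.8, revenue_if_useful=0.05))
print(should_pay_for_article(fee=0.02, relevance=0.2, revenue_if_useful=0.05))
```

The agent is betting before it sees what's behind the paywall, so the fee it will tolerate tracks how useful it expects the article to be.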

There are a few companies building with this vision: TollBit, which is calling itself “Spotify for data”, and HumanNative.ai are both working to become this marketplace. Such business models put control back in the hands of creators, and shift us away from data as a fixed cost and toward data as a variable cost. Since it’s impossible to know whether the information will be useful at the time of data “purchase” (the agent doesn’t know what’s on the other side of the paywall), the price of data scales with the likely usefulness of the information in the data set. It’s like when you hit a paywall for an article you’re not sure will have the information you’re looking for.

C. What if we monetized at output?

Monetizing even later, at output, would align data usefulness most closely with cost. You might imagine that on top of an LLM’s output, you devise a mechanism for determining how much each underlying source was used — e.g., this output was 20% based on info from the NYT and 10% based on information from Politico. Rather than just listing “which sources were consulted”, you can imagine some way of knowing “how much was this source consulted in generating this output?” Using that result, you can figure out a fair way to distribute proceeds to the owners of the underlying content.
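Once you have those attribution percentages, the payout math is the easy part. Here's a minimal sketch using the 20% NYT / 10% Politico example. It assumes the attribution scores are simply handed to us (that's the hard, unsolved piece) and that there is some per-answer pool of proceeds to split; `split_proceeds` and the $1.00 pool are hypothetical.

```python
def split_proceeds(pool: float, attribution: dict[str, float]) -> dict[str, float]:
    """Pay each source its attributed share of the pool.

    attribution maps source -> fraction of the output attributed to it.
    Shares may sum to less than 1; the unattributed remainder is not paid out.
    """
    if any(share < 0 for share in attribution.values()):
        raise ValueError("attribution shares cannot be negative")
    if sum(attribution.values()) > 1 + 1e-9:
        raise ValueError("attribution shares cannot sum to more than 1")
    return {source: round(pool * share, 2) for source, share in attribution.items()}


payouts = split_proceeds(1.00, {"NYT": 0.20, "Politico": 0.10})
print(payouts)
```

In this sketch, the 70% of the answer that isn't attributed to any source stays with the AI company; whether that's the right default is a business and policy question, not a coding one.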

It’s worth mentioning that given current model architectures, it’s challenging to identify attribution for training data and much easier to identify attribution for reference data. For training data, attribution isn’t precisely defined: it is impossible to know, without looking at the exact data set that was initially fed in, which sources the model used to learn. Even so, we may be able to come up with proxies that reflect consensus on what is “good enough”. On the flipside, for reference data, models are already able to list sources, making attribution easier.

Monetizing on this horizon would make data a true variable cost — only once an AI model has decided that some content is of high enough quality that it is worth serving up to the user is the content owner ultimately paid, most closely aligning the incentives of model-builders and content owners. Both are rewarded when high quality data results in high quality output.

Additionally, monetizing at output may avoid the “fair use” question altogether, since content owners are only paid if the output is deemed sufficiently similar to the copyrighted work. Think of it like a writer who accidentally plagiarizes their source material: once that’s spotted, credit goes to the original author.

What are the ramifications of increasing data costs?

At present, data ingestion has been free (or relatively cheap— a couple million dollar data licensing contract is a drop in the bucket compared to compute costs). Changing that fact and charging for data consumption would have implications for AI companies and publishers alike.

For AI companies, increased data training costs would present a significant change to the cost structure of their businesses, eroding margins. In fact, lawyers for AI companies are arguing that paying for training data would torpedo the business model altogether: it’s just not financially viable, they say. It’s hard to tell from the outside whether or not that’s true, but it’s not so surprising they’re saying that (and all of this other stuff about why they shouldn’t pay publishers).

For publishers, increased data costs could go in either direction. On one hand, increased data costs might secure pay for high quality content, which then incentivizes more high quality content creation. Or, on the other hand, pay-walling and shielding data use might present a distribution challenge to publishers — if AI companies don’t reference their work, users of those AI tools might not find them.

Concluding thoughts

It’s hard not to wonder if we’re working on a problem that doesn’t need a solution, if maybe we’re trying to jump aboard a ship that’s already left harbor. It’s entirely possible AI models have gotten to the point where they no longer need human-created training data. Many, including OpenAI co-founder and CEO Sam Altman, insist that, for example, GPT-4o can generate all the data needed to train GPT-5. This view has its skeptics, who point to AI hallucination and contend that with lower quality, AI-generated training data, it will be even harder to see leaps in model improvement. Still, it’s certainly possible that the cat is already out of the bag, and I suspect it would be hard to backpay publishers for models already trained. Reference data too, I suspect, will continue to be a topic of contention, and the likely truth is that different data should be monetized in different ways, making room for many of the above business models.

Thinking forward to the legal battle ahead for publishers and creatives (what happens to Scarlett Johansson’s likeness??): maybe having a mechanism to pay publishers makes it more likely they will get paid. In other words, maybe creating a technology that enables monetization of data is more likely to result in policy decisions requiring AI companies to pay publishers, since there would already be a solution.

We’re working with an iconic founder thinking through a lot of the above. If you’re a publisher grappling with these questions, a developer with opinions about data monetization, or a policy expert thinking about fair use in the era of AI, I’d love to be in touch. 🙂

If you liked this, check out these articles by ppl much smarter than me:

Anything by Nick Vincent (here are a couple good ones: 1, 2)

New York Times: How Tech Giants Cut Corners to Harvest Data for AI

Epoch AI: Will We Run Out of ML Data? Evidence From Projecting Dataset Size Trends

Axios: TollBit Raises $7M to solve the AI vs. Publisher Conflict

The Guardian: New Bill Would Force AI Companies to Reveal Use of Copyrighted Art

Futurism: AI Loses Its Mind After Being Trained on AI-Generated Data

Hey Anant! Great article!

It got me thinking if specific high quality training data would be more valuable and thus economically viable for smaller curated models rather than the big providers (e.g., OpenAI). With GPT-4 being trained on trillions of tokens, it could be hard for a data provider like NYT to make sustainable revenue; however, if instead it was easy to license NYT data to produce a model trying to be an expert essay editor, maybe that could work?? Also, is the future business model of NYT and other content creators going to be similar to Reddit’s shift to charging for the data they produce?